Game Monetization, Part I - Lessons of History

One of the core beliefs we have at MetaPortal is that to come up with creative, new solutions to problems, you have to study history (past attempts and models) and understand the many other fields of expertise from which knowledge can be imported.

To this end, to solve the issues of crypto game monetization and sustainability today, we’ve decided to look into the granular details of the history of game monetization, in conjunction with our research into macroeconomics and governance (which constitutes the latter portion of this series), with the goal of creating a step change in how crypto games are understood.

Table of Contents

Arcade Era Games

Premium-Priced Games

Subscription

DLCs

Sales Cuts

Free-2-Play

Conclusion

This piece will be separated by the different distinct ways games are monetized, then further partitioned (if applicable) into chronological, platform-specific, or geographical segments.

Part 1 will be a retrospective piece, highlighting the key milestones in the different ways that games have historically been monetized. Part 2 delves into new schools of thought on how NFTs and blockchains can add value to player experiences without over-financialization while still offering new (and perhaps better) ways to monetize.

Note that the content here emphasizes how individual games are monetized rather than monetization at the distribution/publisher level (i.e., not the Steam store).

Arcade Era Games

Spacewar!, one of the earliest computer games, was a little program written by MIT students for the PDP-1 mainframe in 1962. The system alone cost 120,000 USD in 1960, was only available to select universities and research facilities, and running the program consumed the device’s entire processing power. Albert Kuhfeld, who played the game at MIT, later wrote about it reminiscently in Analog magazine:

“The first few years of Spacewar at MIT were the best. The game was in a rough state, students were working their hearts out improving it, and the faculty was nodding benignly as they watched the students learning computer theory faster and more painlessly than they'd ever seen before... And a background of real-time interactive programming was being built up.” (This is perhaps indicative of the stage we are at with on-chain/crypto-native games today.)

It was not until the early 1970s that Nolan Bushnell saw it on Stanford’s PDP-6 and understood its potential to be commercialized. He and Ted Dabney recreated the game on dedicated video hardware and called it Computer Space; released in 1971, it became the first commercially available video game, about a year before Pong. These games were built into specialized arcade machines that could only run one specific game.

It is important to remember that coin-operated, pay-to-play games were nothing new in the Western world and Japan:

“...coin-operated machines [electro-mechanical games] had been popular in America for decades by the time [Nolan] got his start in the early '70s, and the pinball arcade had a storied (and notorious) spot in American history.”

The business model itself did not change between electro-mechanical games and arcade games; rather than launching a metal ball in a box, players were now shooting rectangular laser beams on a 2D screen. Manufacturers like Atari or Sega would first develop a game and build the respective machine for it, then sell it to regional wholesalers, who in turn sold or leased it to local shops that wanted arcade machines. The arcade owners would then pocket all the quarters earned.

While it may seem unfair to contemporary gamers accustomed to F2P, the quarter-slotting, pay-per-play model was a necessity for this arrangement to work. The combination of:

High up-front costs for all parties involved

High execution risk for the arcade owner as they had to own and provide the hardware

Will there be traffic? Will people around this area be interested in arcades and see my shop?

The need to rent a physical location, which meant taking on overhead and other associated costs like maintenance

Is the arcade game I bought popular and trendy?

Physical size and technological limitations of the machine, which meant only 1 or 2 people could play at a time and so capped how many players could be monetized at once

Players already being accustomed to paying per play from carnivals and EM (electro-mechanical) machines

…meant this was the most logical way to monetize arcade machines. The coin-insertion model also gave game-makers a profit incentive to make games as hard as possible, even unbeatable, so gamers would keep coming back. Between this profit incentive and the limited storage space of the hardware, game makers had to recycle the same content (sprites, maps) and raise the difficulty instead to stretch playtime.

When Space Invaders was released in 1978, the arcade truly hit its stride. To this day, Space Invaders still easily ranks within the top 5 highest-earning games ever made in lifetime revenue, even after accounting for incomplete information and reporting lag for more recent titles.

By the 1980s arcades had reached their golden age. Popular games like Pong, Space Invaders, Tetris, and Pac-Man will forever be remembered as the original pioneers of gaming. The industry ballooned to $5B in revenue by 1981, but with the gradual improvement in console and PC hardware, arcades began dying a slow death by the early 1990s before finally disappearing in the early 2000s.

A crucial thing to note is the cross-section between how monetization, game design, technology, and distribution medium can affect each other. If there is one takeaway from this article, it would be this principle.

In arcade games’ case, the arcade machines (both how they were distributed and the technology itself) pushed game design toward mechanics like health bars, limited ammo, and near-impossible difficulty so the games could be monetized through the pay-per-play model.

Premium-Priced Games

As arcades and arcade-influenced game design began to decline, more powerful, dedicated home consoles and PCs began to take shape. This era defined how games monetize themselves to this very day.

Console

Interestingly, it was not Atari that created the first home console, despite its success with arcades. Magnavox got there first: in a risky gamble on the home market, it released the Magnavox Odyssey in 1972 with 12 games.

Despite seeing the massive potential, Atari only brought its hit arcade game Pong home later, with a home Pong console in 1975. Its popularity sparked a technological and content arms race between big players like Coleco, Magnavox, and Atari, as they now saw the potential of this market. Some looked for technological innovations, like Fairchild’s Channel F console, which was the first to use swappable ROM cartridges instead of only built-in games.

Selling cartridges became the repeatable point of sale that game companies were looking for and quickly became the industry standard. From then on, consoles and their respective physical games followed the razor-and-blades model. Games in this era cost anywhere from ~$15 to $80 (not adjusted for inflation) depending on quality and IP, though it was common for people to buy bundle deals at electronics stores, or buy secondhand, for much cheaper.

Unfortunately, this arms race and tsunami of innovations culminated in a total loss for everyone: the Western video game crash of 1983. Over the 2 years from 1983 to 1985, home video game revenues fell from a peak of around $3.2 billion in 1983 to around $100 million by 1985 (a staggering 97% drop).

To people at the time, it seemed like the video game industry was dead and gone, a passing fad that burned bright but briefly. Fortunately, Japanese companies like Nintendo and Sega saw this as an opportunity to enter the Western market. This sparked a global rivalry between the 2 Japanese giants that led to the creation of amazing devices like the NES and Sega Genesis, and titanic IPs like Super Mario Bros. (1985) and The Legend of Zelda (1986).

Nintendo released games that cost anywhere from $40 to $80 up until the early 1990s, but eventually settled on $60 (not adjusted for inflation).

The Nintendo $60 price tag was adopted by every major publisher for their big-budget games and came to be seen by players as the gold standard for quality games (because games made by Nintendo were so consistently good). This held true until “Sony helped drive costs down with the 1994 introduction of the PlayStation and its games printed on compact disc, which was less expensive to produce”. That ushered in the era of the $50 game, which continued with Microsoft’s Xbox in 2001. The $50 price tag stayed until the mid-2000s and the PS3 and Xbox 360 generation, when publishers realized that gamers would be willing to pay $60 again (coinciding with the next economic boom).

Prices for AAA games have barely moved since, despite hugely increased production costs and general inflation, as the $60 price tag represents a mutual promise between publishers and gamers: publishers hold AAA games to a high standard, while gamers accept $60 as the price they are willing to pay for that quality (though some would argue the quality of AAA games has been slipping the past few years and the price is no longer indicative of quality).

The long-unchanging $60 price tag of AAA games is also why many believe DLCs, microtransactions, and even gacha systems have emerged in AAA games: companies want to find new ways to monetize without moving the price tag (GTA V being an infamous example that has earned Take-Two Interactive billions of dollars).

PC

The arrival of computers like the Commodore 64, Amstrad PC, and IBM PC signaled the first wave of interest in dedicated, quality PC games. Hit games from this era included Sid Meier’s Pirates! and Impossible Mission. Similar to consoles, the earliest form of monetization for these games was the sale of individual copies purchasable from stores on physical media: tape, floppy disk, and eventually CD.

Over time, the consolidation of the game-making industry into larger studios/publishers and better cross-platform implementations meant that AAA PC games were also subjected to the same $60 psychological price tag. Obviously, the $60 tag didn’t apply to all games, even from big publishers like EA and Activision, as the price depended on factors like the IP itself, game genre, target demographic, production cost, etc. A small but incredibly important point to remember is that physical games (cartridges/CDs for both console and PC) have a large secondhand market; because they are physical commodities, an individual's resale of household commercial products is protected by the laws of most countries. Unfortunately, developers and publishers could not monetize these resales, which has always been a sore spot for game-makers.

From here on, premium PC games have remained relatively unchanged in how they are monetized (a flat $X), with the price mainly depending on whether it was a large AAA game costing $60 or a small indie game made by a 3-person team costing $20.

However, the rise of digital distribution platforms like Steam has shifted the relationship between players and their games despite the same premium purchase monetization model. This last but monumental shift in how players acquire their games came in the form of platform-based digital distribution, the consequences of it being something we are still witnessing play out to this day (this applies to consoles too). While the premium monetization principle has not changed for off-the-shelf games, the rights that players have over their games have drastically changed. Notably, games sold on digital platforms are just licenses that allow an individual to access them on the platform (DRM), so the player never owns the underlying game. Since there is no ownership, the aforementioned market of pre-owned games is shrinking by the day as more games are sold online. With laws possibly shifting as regulators better understand the digital distribution and DRM systems, we are entering uncharted territory for game ownership.

Subscription

Subscription-based games have always been synonymous with MMOs. Much like the earlier principle of how monetization mutually affected the game design of arcade games, MMOs and their different operational requirements drove the necessity for a subscription-based game. Subscription services are also commonly thought of as the first iteration of the model of Games-as-a-Service (GaaS), as they can be easily layered on top of other types of monetization.

Platform-Based

Subscription services surprisingly date back to the earliest console days, when Mattel allowed subscribers to its PlayCable program to download new games onto their Intellivision consoles over TV cable in the early 1980s (the service was discontinued shortly after the crash of 1983).

The Japanese giants Nintendo and Sega then dipped their toes into the market in the mid-1990s. The Satellaview for the SNES offered Japanese subscribers over 114 games via a satellite connection, featuring both remastered NES and SNES games. Meanwhile, Sega introduced the Sega Channel, which offered subscribers a monthly rotating roster of 70+ games via a cable television adapter. However, because technological limitations hurt the user experience, neither project truly stood out (Sega tried again later on its Dreamcast console with the SegaNet/Dreamarena service, but failed due to technological limitations again).

Where Sega failed, Xbox succeeded. Microsoft launched Xbox Live (now Xbox Network) for the original Xbox in 2002. Xbox Live is the underlying service behind achievements, messaging, and other platform features. The key selling points of this subscription service were:

Allowed players to play online multiplayer

One-stop shop for all game-related things on the Xbox platform

Trackable achievements and rewards for different games

Digital way to store games and game items

By then designing or welcoming games to the platform whose core experiences were locked behind multiplayer (Halo!!!), Xbox funneled players into purchasing a subscription. It wasn't until very recently (April 2021) that Xbox dropped the Xbox Live Gold requirement for free-to-play multiplayer games like Fortnite; that players paid for Gold for years just to access multiplayer demonstrates how much they valued it.

PlayStation later joined with the PlayStation Network (PSN) in 2006 and the PlayStation Plus subscription in 2010, offering a similar 2-tiered service for games on its platform. In 2012, PlayStation Plus added the ability for players to play a rotating selection of older PlayStation games, which Xbox quickly emulated by adding older Xbox games to its Xbox Live Gold service. Several years later, Xbox revamped its strategy and launched Xbox Game Pass, which included not only Microsoft’s games but also games from other major publishers who were willing to sign on.

Individual Games

Contrary to consoles where the focus was on offering multiple games/services with subscriptions due to the oligopolistic grip publishers and console makers had, PC game subscriptions were usually more focused on individual games thanks to the greater flexibility PC could offer.

While MUDs (Multi-User Dungeons) had been around since the late 1970s, running on expensive mainframes like the PDP-10 (similar to Spacewar!), the first commercialization attempt began with Island of Kesmai, a roguelike game that charged an eye-watering $12/hour. These communities were still minuscule compared to later MMOs, as they were limited by technology and cost, but they offered a glimpse into the future potential of multiplayer games.

The first true Internet-based MMO was Meridian 59, launched by 3DO in late 1996. Meridian 59 was the first MMO with 3D graphics and was one of the longest-running original online role-playing games. While it was not as successful as its successors, it certainly left its mark as the “first” of many things in the gaming world.

However, it was none other than Ultima Online and subsequently EverQuest that propelled MMORPGs into the mainstream gaming consciousness. Many attribute the success of MMOs to their being “chat rooms with a graphical interface”: the novel social element was the primary ingredient in their success. The success of early MMOs like EverQuest and Ultima Online caused many studios to try to emulate them in the early 2000s, but few were able to deal with the sheer complexity of MMOs. Emerging from the struggle were giant household names like Eve Online, Second Life, and of course World of Warcraft.

While each game was big in its own right in this era, World of Warcraft truly towered over the rest. The Warcraft IP was already a well-established brand that had an intricate universe with a plethora of lore from the games, books, and other mediums to draw from. At its height, it boasted a whopping 12 million subscribers in 2010.

These subscription-based MMOs initially justified their $10 or $15 per month cost with server maintenance and the promise of regular content updates/live events every 2-3 months; and for the most part, it was an excellent symbiotic relationship between players and developers. Larger game-changing additions to the game required players to buy an expansion pack, like WoW’s many expansion packs over the years.

This model worked well for a while until the emergence of microtransactions, popularized by advancements in the F2P model and the mobile game space. In the early 2010s, a multitude of MMOs added cash shops where players could purchase unique cosmetics such as armor skins or mounts with fiat currency (WoW launched its shop in 2013), and some even went entirely free-to-play (F2P). While microtransactions allowed companies to better monetize, they were also a double-edged sword that slowly eroded player trust due to concerns about Pay-To-Win (P2W) and content erosion (covered in more depth in the Free-2-Play section below).

DLCs

DLCs have been around for much longer than most people realize; since the early 1970s, they have existed in the form of new card packs or tabletop maps that players could buy to expand the original set. As the transition to video games began, game makers saw the same monetizable scheme and adapted it to the new medium as expansion packs. Expansion packs were add-ons that added substantial content like new gameplay systems, maps, and more to the base game, wholly changing how it was played. For example, Brave New World’s addition of the Ideology system completely altered how the late game of Civ 5 played. Over time, due to developments in in-game technology, eroding player standards, and rising costs, DLCs now generally include:

New features, like extra characters, levels, and challenges

Items that help you progress through the game, such as weapons and power-ups

Cosmetic extras, such as character outfits and weapon skins

Season passes that grant early access to upcoming DLC

DLCs nowadays are seen in a more negative light, simply because many companies no longer put in the effort to justify their price tags. DLCs have also been increasingly associated with F2P microtransactions despite a more defined separation in the past, primarily due to the aforementioned reasons and the smaller price tags used to entice people to pay.

PC

Early DLCs on PCs came in the form of purchasable CDs that could be read to access new content in the original game. Some games even offered DLCs for free, such as Total Annihilation, an RTS game launched in 1997 that released new downloadable playable units over the Internet every month. Interestingly, some speculate that adding DLCs was an early way to discourage players from selling their games secondhand.

However, it wasn’t long before DLCs gained a bad reputation due to the many methods developers and publishers used to better monetize their games.

Bethesda's then-pricey $2.50 Horse Armor is the single most cited example of a DLC implementation gone wrong. TLDR, Bethesda essentially wanted to cash in on the free player-made skin market with something official of its own, but Oblivion was a single-player game, so a purely cosmetic DLC made little to no sense (cosmetic DLC is usually a way to show off in a social context). Players were outraged, but despite the backlash, it worked out pretty well for Bethesda. (The horse armor became so infamous that Bethesda itself was still making memes about it 10+ years later.)

Even worse was the emergence of “on-disc DLC”, where the content physically exists on the disc but is locked behind a digital paywall to further nickel-and-dime players.

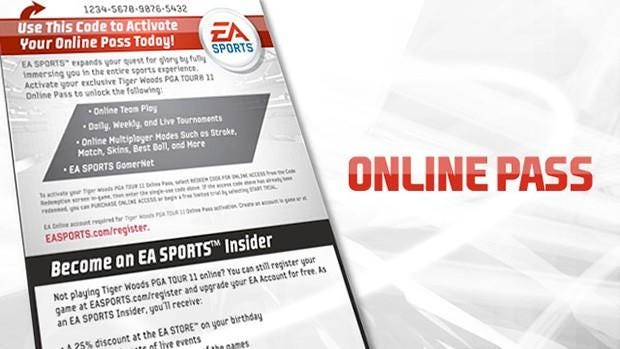

Another way companies began to tighten monetization was to discourage physical games from being sold secondhand. The most egregious example was the “Online Pass” used in some online games by select publishers in the late 2000s/early 2010s, which behaved similarly to a DLC. Gamers had to buy a new copy of the game to receive a single-use serial number that unlocked multiplayer features, permanently tied to that one account. Anyone who bought a secondhand copy of a multiplayer game with this system had to pay the publisher for a reactivation code (usually ~$10). This massively slowed down secondhand sales of these multiplayer games and completely killed rental programs overnight. To no one’s surprise, EA was the strongest proponent of this implementation and included it in a whole slew of its sports games like NHL 11, PGA 11, and Madden NFL 11 (and, of course, Ubisoft quickly followed suit).

Thankfully, gamers around this time unironically rose up and massively protested against this tactic. Eventually, EA and others backed off several years later and removed the Online Pass system, marking one of the depressingly few victories the gaming community has scored against anti-consumer behavior from game companies.

Unfortunately, the rise of digital distribution has made partitioning content into DLCs easier, more frequent, and more seamless, which has numbed players into accepting it. Combined with the fact that games aren’t resellable on digital platforms, many would argue that the gaming industry is the ultimate winner in this fight.

Console

Unbeknownst to many, downloadable content on consoles goes back to the early 1980s. Namely, the GameLine service for the Atari 2600 allowed subscribers to download games temporarily over a telephone line. However, the service struggled from the start due to a lack of product-market fit, high pay-per-play costs, and poor user experience, so when the 1983 crash came, GameLine was completely wiped out.

Things did not change much for consoles until the advent of Xbox Live (now Xbox Network): DLCs were first implemented on the original Xbox but truly flourished under the Xbox 360. The Xbox Live store featured robust ways to transact and buy anything from tiny $1-$5 cosmetic deals to whole $20 expansion packs for Halo maps. Many cite this service as the first appearance of microtransactions as we know them today. Xbox also pioneered store points with its then “Microsoft Points”, which could be bought through physical gift cards to avoid banking fees on small purchases, a store-currency approach that many platforms and publishers have copied since.

Sales Cuts

Implementing sales tax on tradable goods is an act as old as time, so it's no surprise there are similar implementations in the gaming world.

This method of monetization is more unique compared to the rest so far, as it generally only works when 3 requirements are satisfied (in descending order of priority):

Game devs have a stable and controllable platform on which to run the marketplace (think proprietary web 2), with the ability for players to have an in-game wallet that can be loaded with fiat currency to transact with and store value

The platform has a high-population game (or games) with mechanics that encourage players to engage in the marketplace (generally cosmetic elements)

Items gainable from playing the game are usually randomized with different levels of rarity

(As mentioned earlier, this article is less about platforms and more about individual games, but the power to monetize a genre-defining or evergreen game is certainly nothing to scoff at.)

Historically, players have traded in-game items for real money outside the game. This constitutes real-money trading (RMT), and it is why you see accounts or in-game items being auctioned off on eBay or on shady-looking sites willing to facilitate trades between players. Even in the earliest MMOs, people were willing to engage in marketplaces with real-world currency to get what they wanted. Unfortunately, due to the risk of copyright infringement and disruption of in-game economies, eBay officially banned the sale of digital goods from online games in 2007. Only a few exceptions were made, Second Life being a notable one because:

It was treated as a simulation rather than a game (because it had no end objective or “end-game” gear)

In-game objects created for Second Life are treated as the property of the player rather than the game, therefore having full rights over their items

This point is where we believe crypto can be a real value add thanks to the unique properties of blockchain and will be addressed in Part 2.

Trading of in-game items was especially apparent in games with extensive cosmetic libraries, such as Valve’s Team Fortress 2. Rather than shying away like other developers, Valve wanted to embrace and monetize the trading of digital goods, with some tweaks. Luckily, they had not only the foresight but also the tools to do it: a great game and a large platform to facilitate this endeavor. Valve launched the Steam Marketplace in 2012, knowing there was a booming trading economy around the in-game hats existing outside the game (everyone knows you can’t play the game without wearing a hat). Combined with the Steam Workshop (a user-generated content sub-platform launched in 2011 that allowed players to create skins, mods, and maps for select games), it was an instant success. While item trading started with Valve’s own games like TF2 and CSGO, third-party developers on Steam either made their own content tradeable or even allowed players to sell self-made content on the Marketplace (at the discretion of the game creator and Valve).

The Marketplace included a ~15% buyer fee, which was high enough to deter market arbitrageurs while not so steep as to discourage players from trading. While the fee is subject to change, generally 5% goes to Steam and 10% goes to the publisher (so for CSGO and TF2, Valve collects both cuts). By introducing an official marketplace for players to transact on, Steam protected players from shady deals on black markets while simultaneously creating an undying source of revenue for both itself and third-party developers.
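To make the fee split concrete, here is a minimal Python sketch of a single marketplace sale. It assumes, based only on the approximate figures above, that a 5% platform fee and a 10% publisher fee are both calculated on the amount the seller receives and added on top of it, rounded down to whole cents with a one-cent minimum. The function name and rounding rule are hypothetical illustrations, not Valve's documented implementation, and real rates vary by game.

```python
# Approximate rates from the text; actual rates can vary by game (assumption).
STEAM_FEE_PCT = 5       # platform cut, in percent
PUBLISHER_FEE_PCT = 10  # game publisher's cut, in percent


def split_sale(seller_cents: int, valve_published: bool = False) -> dict[str, int]:
    """Hypothetical breakdown of one marketplace sale, in integer cents.

    Both fees are computed on what the seller receives, rounded down,
    with a one-cent minimum each (an assumed rounding rule).
    """
    steam_fee = max(1, seller_cents * STEAM_FEE_PCT // 100)
    publisher_fee = max(1, seller_cents * PUBLISHER_FEE_PCT // 100)
    # For Valve's own games (e.g. TF2, CSGO), Valve is also the publisher,
    # so it collects both fees.
    valve_total = steam_fee + (publisher_fee if valve_published else 0)
    return {
        "buyer_pays": seller_cents + steam_fee + publisher_fee,
        "seller_receives": seller_cents,
        "steam_fee": steam_fee,
        "publisher_fee": publisher_fee,
        "valve_total": valve_total,
    }


if __name__ == "__main__":
    # A $10.00 hat sold in a Valve-published game.
    print(split_sale(1000, valve_published=True))
```

Under these assumptions, a seller asking $10.00 means the buyer pays $11.50; in a third-party game, $0.50 goes to Valve and $1.00 to the publisher, while in TF2 or CSGO Valve keeps the full $1.50. Working in integer cents avoids floating-point rounding surprises on small prices.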

By 2015, Steam reported that it had paid out more than $57 million to community creators alone.

Free-2-Play (F2P)

Free-2-Play is a whole new beast that truly propelled gaming into the consciousness of the general public, and attracted an entirely different demographic separate from preceding generations of gamers. The mobile market exceeded all expectations regarding global player count and revenue generated and continues to grow at a stunning pace today.

Shareware

Shareware was a model in which a version of the software with limited capabilities, or with content restricted behind a paywall, could be freely distributed by users, while the full version had to be purchased. It was used to combat piracy for productivity software and games through the 1990s and into the early 2000s, and it also turned users into distributors of a product they endorsed, which was an effective sales method. A popular game that used this model was the original Doom: its first episode could be played and shared for free, while the rest of the game had to be bought from the developer. Shareware discs were available at stores, and players could pass copies along to their friends to try out. If they liked it, they could mail a cheque to the publisher and have the full game mailed to them.

East Meets West

The first F2P games in the West originated from a slew of successful MMOs that targeted children, such as Furcadia and RuneScape. In the early years, they had limited ability to monetize and instead used user-generated content (UGC) and volunteers to keep content fresh for players, while sprinkling a freemium model on top, with small perks like exclusive servers and cosmetics, to monetize.

Meanwhile, in the East, Nexon released a game called QuizQuiz in 1999. The game was an MMO quiz game similarly targeted at kids that had cosmetic microtransactions, and it was clear they saw the potential in this model, as they later released Maplestory in Korea in 2003 with the same model. The F2P model resonated (and continues to) very well with the East Asian market. However, these early Asian F2P games were blatantly Pay-To-Win (P2W), but the Asian audience treated it like the norm and was not bothered by the difference.

Seeing the success that the East was having with this new model, Western developers began considering emulating it in both new and existing games, but tweaking it to remove P2W elements. The desire to transition from a subscription-based model to an F2P one was especially apparent in the MMO genre. The transitions were generally difficult and risky, but games that managed them successfully, like Star Wars: The Old Republic and Rift, profited socially and financially. Here is what Rift’s Creative Director Bill Fisher had to say after the game's transition from a subscription model to F2P:

“The effect on the player base has been tremendous. Our daily active users jumped around 300% after the free-to-play announcement. There was a significant amount of re-engagement. Players that were inactive for 14 days or more came back at a rate of 900%. It’s staggering,” says Fisher. “Revenue increased more than five times. We’re creating a product that people can fall in love with and not just trying to milk people for dollars. We’re not putting in mechanics to try and trick people out of their money.”

This wave of success spurred even more interest, this time with developers who focused more on creating new games rather than revamping old ones. This momentum, combined with the then-budding MOBA concept, generated games like League of Legends, Dota 2, and Smite that worked on the concept of F2P. League especially demonstrated that with a magnetic core gameplay loop that kept players coming back for more, it was entirely possible to make a billion dollars per year through a free game using F2P monetization strategies.

Rise of Mobile

While this F2P transformation was happening to PC games, F2P mobile games were rising alongside the advent of smartphones. The earliest phone apps charged a flat fee to download a game, like $2.99 for Doodle Jump, but through experimentation and iteration, app developers found it was easier and more profitable to monetize the few players who truly loved their app rather than charge a premium fee, since a flat premium fee substantially shrinks the potential user pool. Especially for apps that could reach a wide audience, could add new content regularly (like games), and weren’t must-have apps, switching to an F2P model was a no-brainer.

Around the turn of the 2010s, two major game makers entered the scene and transformed this space. One was Zynga, whose game FarmVille propelled Facebook's financial growth and traffic to new heights while controversially redefining what counts as a game. Some in the industry argued that Zynga games barely qualified as games. Others compared them to a Skinner box dressed up as a game because of how Zynga conditioned players to stay engaged with its apps.

The other was Supercell, whose well-placed marketing and socially focused games would take the world by storm with smash hits like Clash of Clans and Clash Royale. Both giants demonstrated that mobile could reach audiences, like soccer moms or middle-aged white-collar workers, who weren't traditionally seen as "gamers". This newfound demographic would soon be labeled "casual gamers" or "mobile gamers".

Whaling - The Top 1-10%

This article by Jon Radoff neatly distills the economics of the F2P model: how and why it works. We highly recommend reading it if you have the time.

TL;DR: even as in-app purchases (IAPs) become increasingly normalized, the majority of F2P players will never spend any money. It is therefore important to capture the small percentage of players who will pay (so-called "whales"), using the right IAP tactics and offers to incentivize those willing to pay to spend more.
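To make this concrete, here is a minimal Python sketch of how F2P revenue concentrates in a handful of payers. The segment names, player shares, and average spend figures below are hypothetical placeholders chosen purely for illustration; they are not data from Radoff's article or from any real game.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    name: str
    share: float      # fraction of the whole player base in this segment (hypothetical)
    avg_spend: float  # average spend per player in this segment, in USD (hypothetical)


def revenue_by_segment(players: int, segments: list[Segment]) -> dict[str, float]:
    """Revenue contributed by each segment of the player base."""
    return {s.name: players * s.share * s.avg_spend for s in segments}


def arpu(players: int, segments: list[Segment]) -> float:
    """Average revenue per user, counting the players who never pay."""
    return sum(revenue_by_segment(players, segments).values()) / players


# Illustrative player base: most never pay, a few spend a little, a tiny minority spend a lot.
segments = [
    Segment("free", 0.970, 0.0),
    Segment("minnows", 0.025, 5.0),
    Segment("whales", 0.005, 300.0),
]
players = 1_000_000

rev = revenue_by_segment(players, segments)
total = sum(rev.values())
print(f"total revenue: ${total:,.0f}")
print(f"whale share of revenue: {rev['whales'] / total:.1%}")
print(f"ARPU across all players: ${arpu(players, segments):.3f}")
```

With these made-up numbers, the 0.5% of players classed as whales produce roughly 92% of total revenue, and spreading that revenue across everyone gives an ARPU of $1.625 even though 97% of players spend nothing. Shifting the whale segment's spend moves the total far more than anything done for the free majority, which is exactly the incentive described next.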

As F2P matured, the industry as a whole refined a harmful positive feedback loop in which content is designed to attract, retain, and maximize spending from the small percentage of players willing to pay.

This is neither inherently evil nor unexpected; it is only natural for for-profit companies (especially publicly traded ones under pressure to maximize returns, like Activision Blizzard or EA) to go down this route to monetize their player bases. However, this feedback loop has consistently ruined game after game in the pursuit of ever more monetization, with Diablo Immortal setting a new low for the industry.

Many in the space believe that without regulation, monetization methods will not improve or change. We at MetaPortal believe crypto offers unique capabilities for incentive alignment that can restructure monetization so it is neither predatory toward the "whales" who pay up nor exclusionary toward F2P players. In Part 2, we will explore which incentive alignments crypto makes possible in games and how they could change the current F2P landscape, using data to support our hypotheses.

In-App Purchases: Ads, Battle Passes, Gacha, Loot Boxes, and More

As mentioned previously, F2P games generate revenue in two main ways: selling cosmetics, which target social players seeking status as well as players who want to support the game while looking good; and selling convenience.

Convenience transactions include:

Paying to skip ads (players who don't pay watch the ads instead, which still generates revenue)

Buying exp bonuses to level up faster

Buying insurance so an item or bonus doesn't disappear after a period of time

Paying to skip content

Buying better gear (P2W territory if the game has PvP)

Beyond generating revenue, these tactics drum up player hype and act as retention mechanics. All of them fall under the umbrella of in-app purchases: as the name suggests, purchases players can make seamlessly within the game to enhance their playing experience.

Battle passes and skins generally fall under the cosmetic label, but depending on the implementation, battle passes can give paying users faster exp progression on top of extra cosmetics, which makes them a convenience purchase too. Done right, battle passes have proven to be an incredible player retention system.

Loot boxes and gacha systems use RNG to hand out in-game content, rather than having players earn it through gameplay or other means. While the idea isn't new (think of opening a pack of Pokémon cards), these systems have only become widespread in video games over roughly the last decade. One of the earliest, and certainly most successful, implementations in the West was the Ultimate Team mode added in FIFA 09. In its 2021 SEC filing, EA revealed that Ultimate Team made $1.62B over fiscal year 2021, an astonishing 29% of EA's total net revenue for that year.

Notably, loot boxes and gacha systems have recently entered the crosshairs of regulators around the world, as countries consider whether opening them constitutes gambling and what effects these systems have on minors. Some have pointed out that this model ironically resembles the pay-per-turn arcade model (minus the legitimate physical constraints of arcades), and that the gaming industry has come full circle in how it monetizes its content.

If there is one takeaway from this section on F2P games, it is that with the right content, live-ops management, marketing, and proper demographic segmentation and targeting, it is entirely possible to monetize a small minority of the player base enough to cover the cost of all the remaining free players.

Honorable Mentions

Merchandising

Not every game makes it this far, but merchandise is undoubtedly the end goal for many IPs that get big enough. Margins on branded merchandise, especially for evergreen IPs, are simply too big to pass up. If you don't believe us, slap a Pikachu on any random household item and see how much more you can sell it for.

Merchandising used to be achievable only for the biggest IPs from the biggest companies, like Nintendo or Microsoft, but thanks to the long tail of the internet, falling distribution costs, and the growing number of gamers, any game with a sizable audience can now produce merchandise and market it effectively to its player base.

For example, Deep Rock Galactic is a lovely labor-of-love indie game (so certainly no Pokémon) that costs a reasonable $30. While this is only a guess, it likely has a tight profit margin per copy once you account for the 30% revenue cut taken by distribution platforms (Steam, PSN, etc.), a roughly 20-30% cut to its publisher, 20-30% overhead, and other miscellaneous costs. Meanwhile, here's an official, generic black hat with the DRG logo on it that costs $25, with 100% of the proceeds going to the developers.
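The back-of-the-envelope comparison above can be sketched in a few lines of Python. This is only an illustration of the guessed figures, not DRG's actual financials: it assumes the midpoints of the guessed ranges (25% publisher, 25% overhead), assumes every cut is taken from the gross sticker price rather than compounded, ignores the "other miscellaneous costs", and treats the hat's price as fully kept per the claim above. The function name `developer_take` is hypothetical.

```python
def developer_take(price: float, cuts: dict[str, float]) -> float:
    """Amount left per sale after removing each cut as a fraction of the gross price.

    Assumption: cuts are not compounded (each one is a share of the sticker price).
    """
    remaining_share = 1.0 - sum(cuts.values())
    if remaining_share < 0:
        raise ValueError("cuts exceed 100% of the price")
    return price * remaining_share


# Midpoints of the guessed ranges; miscellaneous costs are left out.
game_cuts = {"platform": 0.30, "publisher": 0.25, "overhead": 0.25}

per_copy = developer_take(30.00, game_cuts)
# Per the claim above, the hat's price goes entirely to the developers.
# Manufacturing and shipping costs for the hat are NOT modeled here.
per_hat = developer_take(25.00, {})

print(f"left per $30 copy: ${per_copy:.2f}")
print(f"revenue per $25 hat: ${per_hat:.2f}")
print(f"copies needed to match one hat: {per_hat / per_copy:.1f}")
```

Under these assumptions about $6 is left from each $30 copy, so a single $25 hat brings in roughly as much as four copies of the game. The real gap is smaller once the hat's production and fulfillment costs are subtracted, but the comparison shows why merchandise margins are so attractive.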

IGA

In-game advertising (IGA) is a niche but interesting way for games to monetize by selling ad space that blends naturally into the game so as not to disrupt the player experience (so it is NOT the irrelevant ads common in many F2P games). Just as product placements can be inserted into movies, there are some cases where they make sense in games. However, IGA is no easy feat to pull off: you have to consider relevance and context, the demographics of the players the game attracts, the medium of presentation, and more. As a result, many brand deals go to games with realistic, real-world settings, like the billboards in EA's FIFA. Nevertheless, as more games are developed and more brands become internet-native, it will be exciting to see what sort of IGA deals can be pulled off.

Conclusion

Hopefully, this piece provided a condensed but still comprehensive look at how games from different eras have monetized themselves.

Finding ways to sustainably monetize a business is a demanding endeavor. It is even harder in the game industry, which has historically struggled to price games sustainably while managing player expectations. The past few decades have been a long and gradual process of game companies finding ever more effective ways to monetize their products, pushing boundaries and testing the limits of consumers.

To be completely honest, if this piece could be summed up in one picture, it would be this:

But it is not all doom and gloom. We believe that next-generation games built on blockchains are uniquely positioned to monetize more sustainably, in ways not yet seen, by creating better-aligned incentives between developers, publishers, and players.

In Part II, we will begin exploring those potential monetization methods, drawing on the lessons of history covered in this piece.