Metaverse, Inc. (vol. 2) - Extended Reality

A few weeks ago, we started a series called Metaverse, Inc. exploring off-chain investment and development in the Metaverse. Our first piece in this series was introductory and touched on several of the larger players (e.g. Meta and NVIDIA) building software and hardware in the space. Today, we continue our journey inside Metaverse, Inc. by diving deeper into extended reality.

What is Extended Reality (XR)?

To level-set on terminology, a quick definition of extended reality/XR may be helpful. Generally, XR encompasses any or all of the three existing flavors of semi- or entirely virtual experiences:

Virtual Reality (VR) – immersive virtual experiences inside artificially created worlds, accessed through specifically designed hardware like VR headsets, glasses, etc.

Augmented Reality (AR) – semi-virtual experiences within the physical world, accessed through specifically designed hardware or through mainstream devices like personal computers, mobile phones, tablets, etc.

Mixed Reality (MR) – a more refined version of AR in which the physical world is recreated virtually, allowing users to control and interact with all the elements they see, accessed through specifically designed hardware like VR headsets, glasses, etc.

As a whole, XR is creating new and exciting ways for people to work, learn, play, and simply exist in environments that merge the physical world with the many digital worlds that exist or are currently being created. Naturally, all of this applies directly to the budding Metaverse, with XR likely to be one of the major ways people access their future digital lives.

The XR ecosystem

The off-chain XR ecosystem is large and growing. According to PitchBook, more than 1,000 companies are creating the infrastructure, hardware, and content necessary to power immersive, shared virtual experiences. Building in the space also spans nearly all industries, with both enterprises and consumers finding valuable applications of XR technology in everything from entertainment to national defense. Funding has followed: VCs invested nearly $4 billion in the sector in 2021, the highest level since 2018. Although there is still uncertainty about exactly how a mature XR landscape will look, there is little doubt that the technology, usability, and quality of XR will continue to improve. Take a look at the graphic below for a quick view of some of the key players in XR.

The science behind XR

Creating immersive 3D experiences and blending virtual and physical worlds is no easy feat. It requires advanced computational technologies, multiple arrays of sensors and fine-tuned visual and auditory feedback. The science behind XR has a long and complex history and goes back a lot further than you might think.

As far back as the 1980s, crude virtual reality technologies were being developed that tracked users' positions in physical space and enabled their shadows to interact when projected on a screen. At around the same time, a wearable VR headset and haptic glove were developed by VPL Research, a company founded by futurist Jaron Lanier. In 1993, SEGA Systems announced consumer-focused VR glasses for its SEGA Genesis console, but ultimately never mass-produced the device.

Over the last 30 years, vast improvements have been made in XR software and hardware, with dozens of companies building technology that will enable the creation of the shared virtual world many early pioneers envisioned. But many interdependent components are still needed to create high-quality XR experiences. Getting key elements wrong can result in poor graphics, unresponsive environments, and even physical discomforts like eye fatigue and nausea. To start, every XR experience needs to make efficient use of the following features:

Responsive 3D Rendering: Producing convincing digital worlds requires extremely sophisticated software and large amounts of computing power. Companies like Ziva Dynamics (now part of Unity Software) are working on creating lifelike animations and digital representations of humans that bring a critical feeling of the “real” to virtual reality. Without natural-looking surroundings, characters, and objects, digital environments can remain stuck in the uncanny valley and motivate users to leave XR behind. The comparison below between CGI actors of the early 2000s and the much-improved digital humans of today shows how far we’ve come in this area.

Intelligent Computer Vision/Machine Learning: In addition to realistic 3D imagery, computer vision, machine learning, and artificial intelligence techniques are required to allow physical and virtual objects to interact. Most mainstream software relies on rigid or procedural codebases that require some degree of predictability. This approach doesn’t work as well with XR, given that the movements and next steps of the main characters of the experience (i.e. users themselves) are unknowable beforehand. To help XR software deal with this unpredictability, ML/AI techniques are used to train computers on appropriate responses to changing inputs. This is what allows digital items, like gear or weapons in a game, to be handled in what feels like a natural way. A simpler, rule-based relative of this idea, procedural generation, is what allows games like Minecraft (acquired by Microsoft in 2014) to generate new areas of a map as a user moves. ML/AI will also enable the creation of more realistic bots within games that human players can interact with in increasingly complex ways. Interestingly enough, Minecraft has also spawned research projects focused on developing AI-powered, non-human players that explore the game entirely on their own, building worlds and gathering resources without any user input.
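To make the map-generation idea concrete, here is a minimal Python sketch of seed-based procedural generation. It is an illustration under our own assumptions, not Minecraft's actual algorithm: the function names (`chunk_heights`, `chunks_near`), the 4x4 chunk size, and the hash-based height rule are all hypothetical. What it shows is that terrain can be derived on demand from a world seed and the player's position, so nothing has to be stored before a user wanders there.

```python
import hashlib


def chunk_heights(seed: int, cx: int, cz: int, size: int = 4) -> list[list[int]]:
    """Return a size x size grid of terrain heights for chunk (cx, cz).

    Hypothetical rule: hash the seed and world coordinates, then map the
    first byte of the digest to a height between 60 and 67.
    """
    grid = []
    for x in range(size):
        row = []
        for z in range(size):
            key = f"{seed}:{cx * size + x}:{cz * size + z}".encode()
            digest = hashlib.sha256(key).digest()
            row.append(60 + digest[0] % 8)
        grid.append(row)
    return grid


def chunks_near(player_x: float, player_z: float,
                size: int = 4, radius: int = 1) -> list[tuple[int, int]]:
    """List the chunk coordinates within `radius` chunks of the player."""
    pcx, pcz = int(player_x // size), int(player_z // size)
    return [(pcx + dx, pcz + dz)
            for dx in range(-radius, radius + 1)
            for dz in range(-radius, radius + 1)]


if __name__ == "__main__":
    # Generate only the chunks around the player's current position.
    for cx, cz in chunks_near(5.0, -3.0):
        print((cx, cz), chunk_heights(seed=42, cx=cx, cz=cz)[0])
```

Because the heights depend only on the seed and the coordinates, revisiting a chunk reproduces the same terrain without saving it.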

Physical Position Tracking: Another necessary element that enables the digital and physical worlds to blend together is real-time position tracking. This is the hardware that allows XR headsets or other devices to accurately position a user in a virtual environment. Inaccurate position sensors can result in glitches like the one in this video of the Oculus Rift. Bosch Sensortec is one company that develops the magnetometers, orientation sensors, and pressure sensors that are essential for determining the position of VR hardware in real space, which can then be mapped onto virtual environments. In other words, these sensors are what tell an XR headset which direction your head is facing, where your hands are, etc. Eye-tracking hardware is another new area of development, with groups like Pupil Labs building customized XR lenses that track pupil movement, allowing users to interact with games and other virtual environments using nothing but their eyes.
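As a rough illustration of how orientation readings become a viewing direction, the Python sketch below converts yaw and pitch angles into a unit "forward" vector and estimates a compass-style heading from two magnetometer axes. The axis conventions (y is up, yaw 0 looks along +z), the assumption that the device is held level when computing the heading, and the function names are ours for illustration only. Real headsets fuse several sensors and apply calibration that is omitted here.

```python
import math


def forward_vector(yaw_deg: float, pitch_deg: float) -> tuple[float, float, float]:
    """Unit vector the head is facing, given yaw and pitch in degrees.

    Assumed convention: y is up, yaw 0 looks along +z, yaw 90 looks along +x,
    and positive pitch looks upward.
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    return (
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
        math.cos(pitch) * math.cos(yaw),
    )


def level_heading(mx: float, my: float) -> float:
    """Heading in degrees [0, 360) from horizontal magnetometer axes.

    Assumes the sensor is level and uncalibrated readings are good enough;
    the angle is measured from the sensor's x axis toward its y axis.
    """
    return math.degrees(math.atan2(my, mx)) % 360.0


if __name__ == "__main__":
    print(forward_vector(90.0, 0.0))
    print(level_heading(0.0, 1.0))
```

A virtual camera can then be pointed along the returned vector each frame, which is the step that maps a physical head movement onto the virtual scene.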

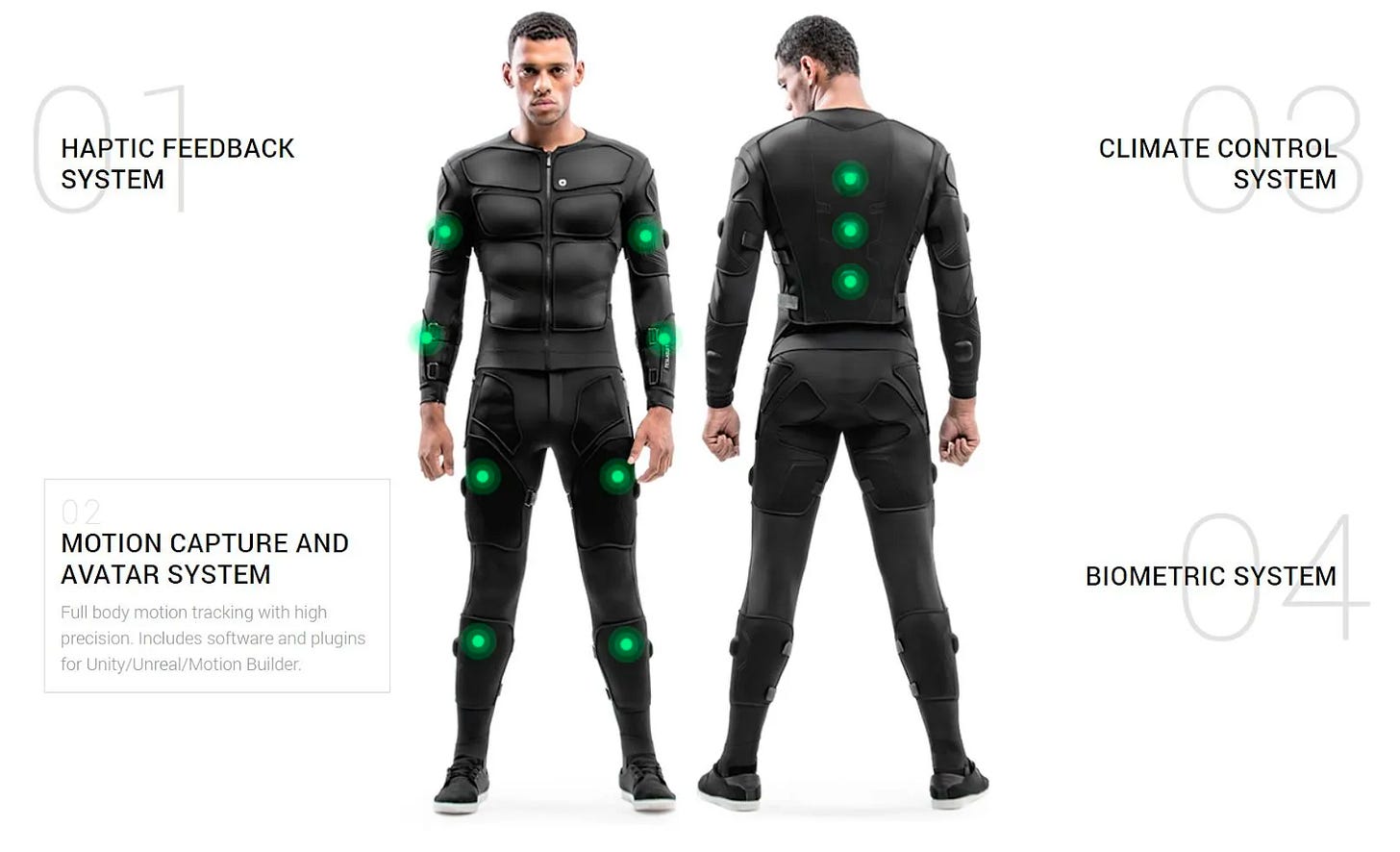

Balanced Sensory Stimulation: Most XR technology focuses on improving visual elements, but being immersed in a virtual world involves more than what we see. What we hear, feel, and even smell can have a significant impact on the “realness” of digital experiences. Combining various sensory stimuli can also meaningfully reduce the side effects of prolonged XR exposure, which include fatigue, nausea, and other undesirable physical symptoms. Haptic attachments are currently one area of development, with groups like VR Electronics going so far as to create an entire haptic suit. The non-visual hardware space is still nascent, but could bring with it some pretty surreal use cases for XR.

Encouraging progress has been made in each of these areas over the past several years, and excitement about XR has never been more intense. However, much more improvement is likely needed before XR reaches mainstream adoption. Most high-quality XR hardware, like VR headsets, is still fairly expensive, and many devices require PC connectivity to work best. The Oculus (now Meta) Quest is the headset closest to achieving widespread popularity, but it leaves much to be desired compared to many enterprise-grade setups. Despite this, we believe we are likely at an inflection point of innovation given the current momentum in product improvement across headsets, a rising tide that will lift all boats.

Is XR needed for the Metaverse?

All of this brings us to a key question: Is XR necessary in order for the Metaverse to reach its full potential? In our opinion, the answer is nuanced. There are many virtual worlds (arguably most of them) that can be accessed from nearly any device and don’t require an immersive XR experience to be enjoyed. Indeed, it is likely that many early Metaverse participants will access the digital universe outside of XR, and it is in the best interests of developers to welcome all to their platforms. For instance, best guesses place the total number of active mobile internet users at around 4 billion. In contrast, it was estimated that only 68 million VR headsets were sold globally in 2020, less than 2% of that figure. While the near-term portal to the Metaverse is likely to be device agnostic, we feel that the full version of the Metaverse in all of its digital glory will require more immersive hardware to be holistically enjoyed.

Conclusion

At the end of the day, XR remains an extremely exciting area of development and one that touches the cutting edge of many sub-industries. In future editions of Metaverse, Inc., we plan to continue sharing insights about the off-chain companies helping to build the Metaverse. There is a ton to delve into here, so if there are specific non-crypto Metaverse topics you would like us to cover, feel free to share. Thanks for reading and catch you next time.